Goals:

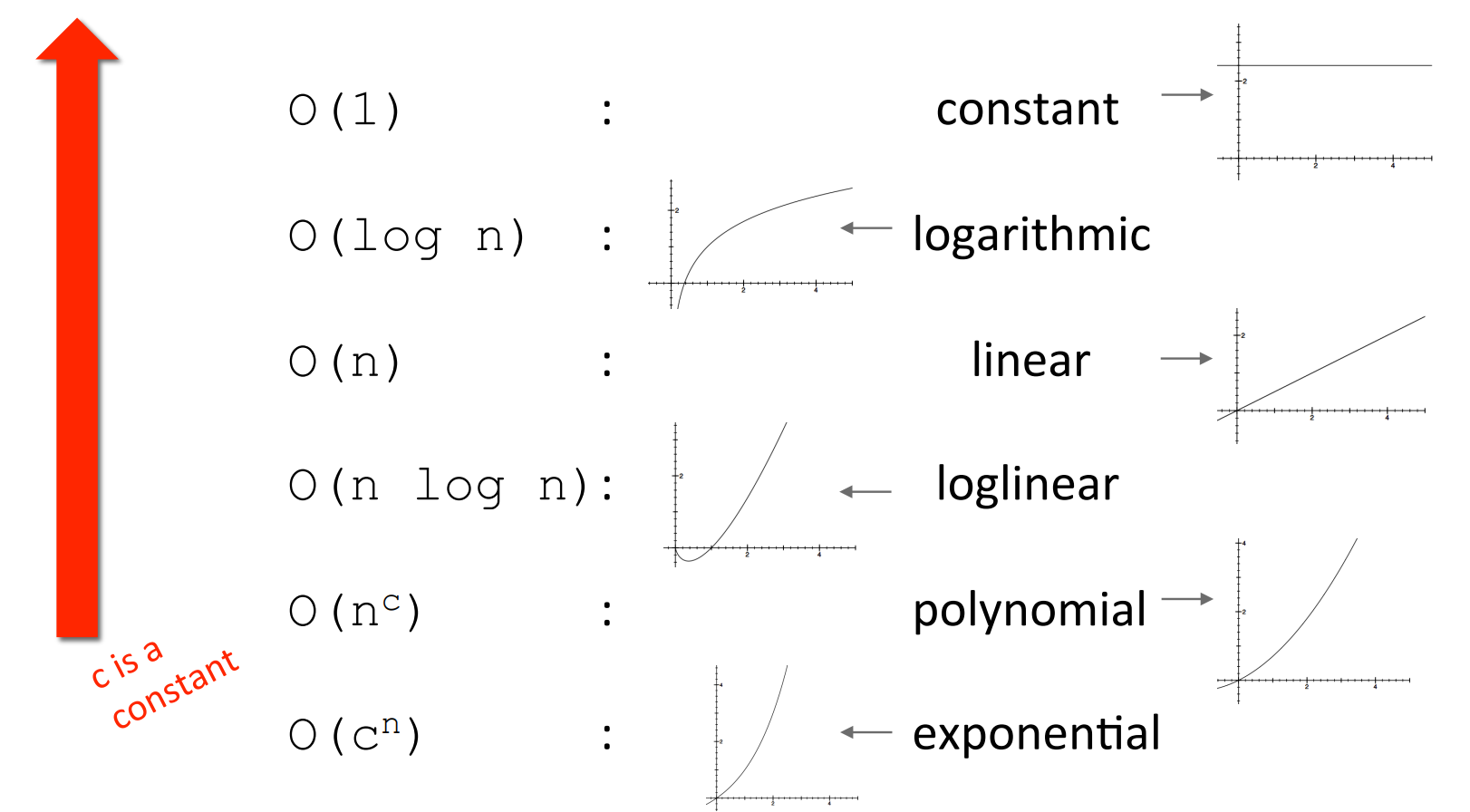

Complexity classes in increasing order:

| Class | n=10 | n=100 | n=1000 | n=1000000 |

| O(1) | 1 | 1 | 1 | 1 |

| O(log n) | 1 | 2 | 3 | 6 |

| O(n) | 10 | 100 | 1000 | 1000000 |

| O(n log(n)) | 10 | 200 | 3000 | 6000000 |

| O(n^2) | 100 | 10000 | 1000000 | 1000000000000 |

| O(2^n) | 1024 | 1.2*10^31 | 1.07*10^311 | forget it! |

Constant complexity class

Logarithmic complexity class

Searching in a list

def search(L, e):

for i in range(len(L)):

if L[i] == e:

return True

if L[i] > e:

return False

return False

This method is called a sequential search.

Self-similarity: find BigO of smaller code blocks and combine them The BigO pseudocode does not cover function calls and some other cases (to keep the discussion simple) --- though experiment to expand this

findBigO(codeSnippet):

if codeSnippet is a single statement:

return O(1)

if codeSnippet is a loop:

return number_of_times_loop_runs * findBigO(loop_inside)

for codeBlock in codeSnippet:

return the_sum_of findBigO(codeBlock)

for i in range(N * N):

for j in range(3, 219):

print("sum: ", i + j)

print("Enjoy COL100");

Show the working of findBigO on this code snippet.

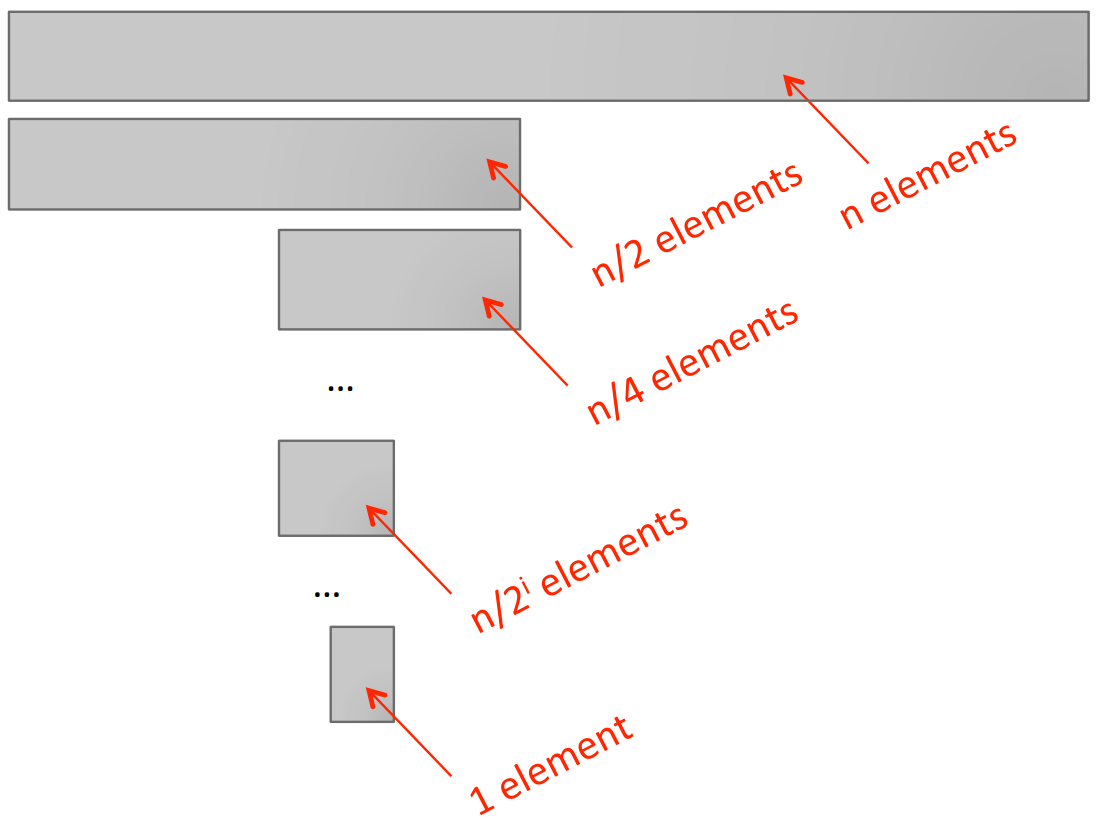

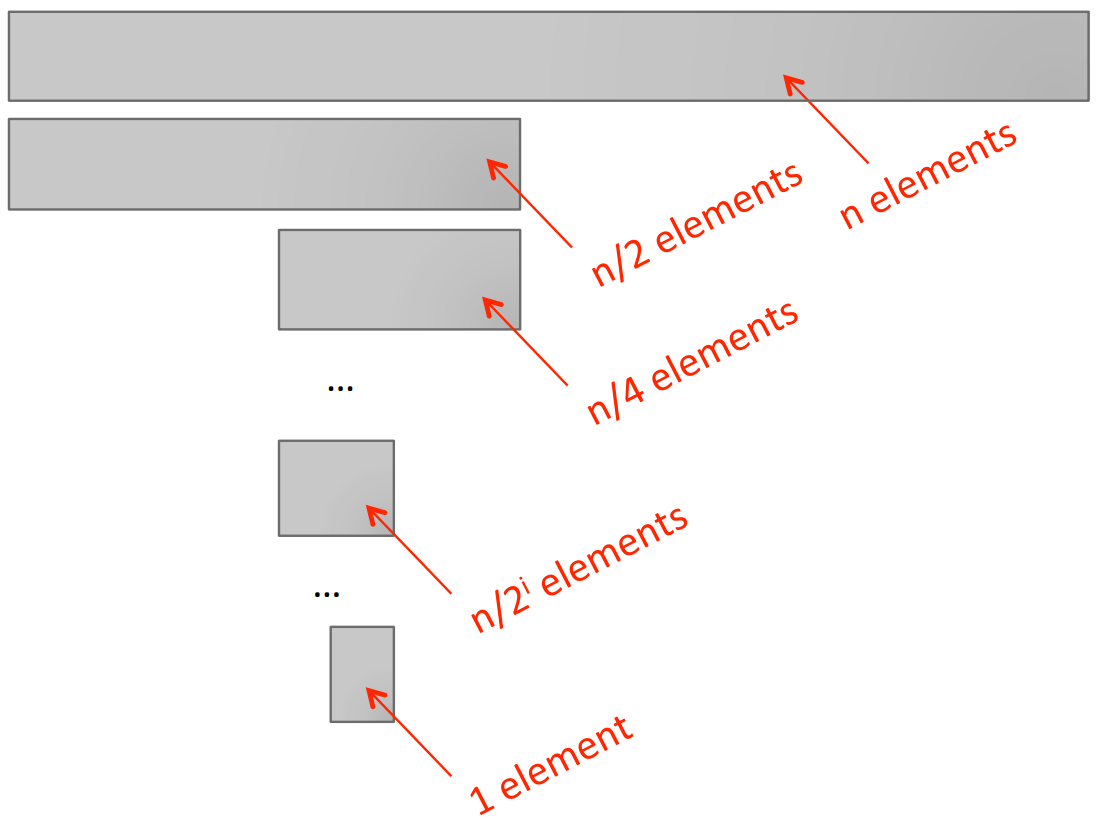

Binary search

e in list LL[i] == eL[i] is larger or smaller than eL for e.Binary search complexity analysis

log n.O(log n) where n is len(L).Binary search implementation 1

def binsearch1(L, e):

if L == []: # this check and return is constant-time-complexity, or O(1)

return False

elif len(L) == 1: # this check is also O(1)

return L[0] == e # this check is also O(1)

else:

half = len(L)//2 # constant O(1)

if L[half] > e: # Is L[half] O(1)? Let's assume for now it is.

return binsearch1(L[:half], e) # involves copying the list, not constant

else:

return binsearch1(L[half:], e) # involves copying the list, not constant

Complexity of binsearch1:

O(log n) calls to binsearch1

n, in worst case in case of range of size 1 when n/2k=1, or k=log nO(n) for each binsearch1 call to copy list:

O(log n)*O(n) indicates that the complexity can be as high as O(n log n).O(n/2i). Thus, the total cost of copying is n + n/2 + n/22 + ... + 1, which is equal to 2n.A better implementation of binary search

n=len(L).

def binsearch_helper(L, e, low, high):

if high == low:

return L[low] == e

mid = (low + high)//2

if L[mid] == e:

return True

elif L[mid] > e:

if low == mid: #nothing left to search

return False

else:

return binsearch_helper(L, e, low, mid - 1) #constant to set up the call. The actual call takes longer

else:

return binsearch_helper(L, e, mid + 1, high) #constant to set up the call. The actual call takes longer

def binsearch(L, e):

if len(L) == 0:

return False

else:

return binsearch_helper(L, e, 0, len(L) - 1) #constant to set up the call. The actual call takes longer

Complexity of binsearch:

O(log n) calls to binsearch_helper

n, in worst case in case of range of size 1 when n/2k=1, or k=log nO(1) for each binsearch_helper execution (including the cost to set up the call)O(log n)*O(1) indicates that the complexity is O(log n).Another example of logarithmic complexity: int2str

def int2str(n):

digits = '0123456789'

if n == 0:

return '0'

result = ''

i = n

while i > 0:

result = digits[i%10] + result

i = i//10

# can you place an assertion here?

return result

What is the correctness argument? What assertion can you put at the end of the body of the while loop? Ans: assert(10**(len(result))*i+int(result)==n). This is an invariant.

Analyzing the complexity of the program above:

n by 10 such that it remains greater than 0?O(log(n))O(1)O(len(result)) as it involves a copy, which is O(log n) in the worst case and average case.O(log n).O(log2 n).How can you change the implementation so it takes O(log n) time?

result be a list, initialized to [] and appended-to using the append() function (which is O(1))O(len(result))) and join it to form a string (again O(len(result))).Linear complexity

Analyzing the complexity of an iterative implementation of the factorial function

def fact_iter(n):

prod = 1

for i in range(1, n+1):

prod *= i

return prod

O(n). n times around loop, constant time each time.Analyzing the complexity of a recursive implementation of the factorial function

def fact_rec(n):

""" assume n >= 0 """

if n <= 0:

return 1

else:

return n*fact_rec(n-1)

O(n) because the number of function calls is linear in n, and constant effort to set up call.Log-linear O(n log n) complexity

Polynomial complexity

isSubset function:

def isSubset(L1, L2):

for e1 in L1:

matched = False

for e2 in L2:

if e1 == e2:

matched = True

break

if not matched:

return False

return True

Exponential complexity

Complexity of Towers of Hanoi

tn denote time to solve tower of size n.tn = 2tn-1 + 1 = 2(2tn-2 + 1) + 1 = 4tn-2+ 2 + 14tn-2+ 2 + 1 = 4(2tn-3+1)+2 + 1 = 8tn-3 + 4 + 2 + 1k expansions: 2ktn-k+2k-1 + 2k-2 + ... + 4 + 2 + 1n-k=1: 2n-1 + 2n-2 + 2n-3 + ... + 4 + 2 + 12n-1.2n.Exponential complexity example: Power set

{1, 2, 3, 4} would generate (order does not matter):

{}, {1}, {2}, {1,3}, {1,4}, {2,3}, {2,4}, {3,4}, {3}, {4}, {1,2}, {1,2,3}, {1,2,4}, {1,3,4}, {2,3,4}, {1,2,3,4}

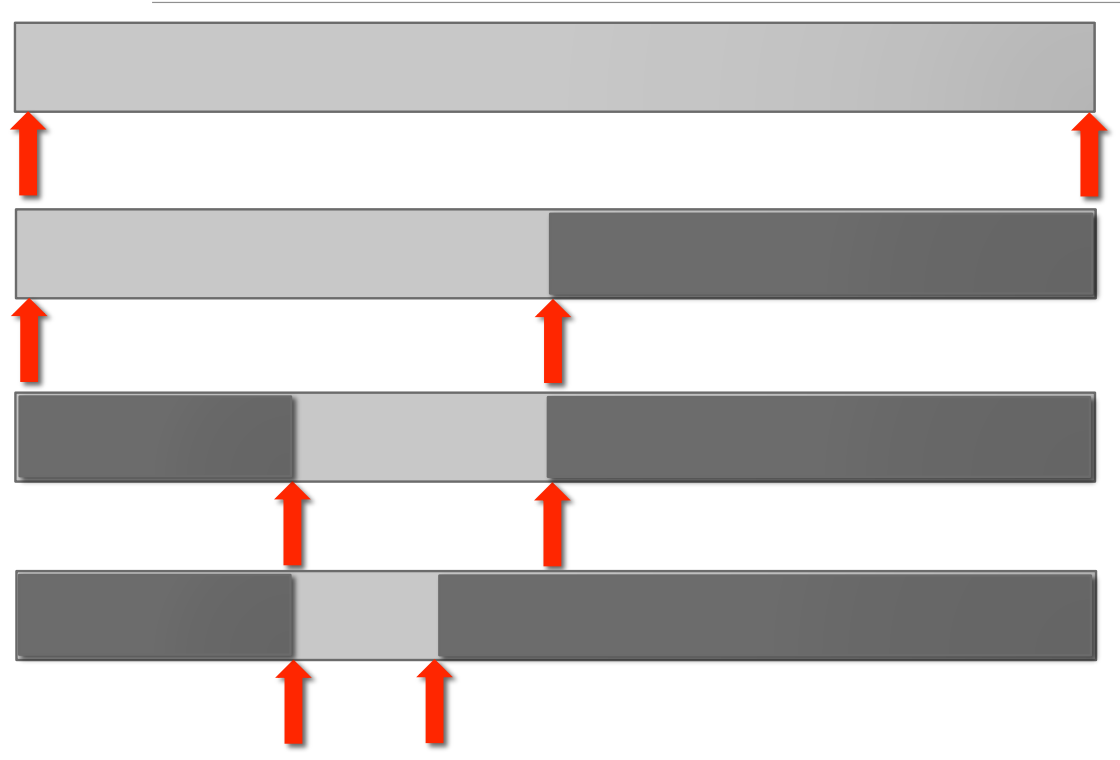

Power set --- concept

n); and all of those subsets with n

added to each of them belong to the bigger power set.Exponential complexity

def genSubsets(L):

res = []

if len(L) == 0:

return [[]] # list of empty list

smaller = genSubsets(L[:-1]) #all elements without last element

extra = L[-1:]

new = []

for small in smaller:

new.append(small+extra) # for all smaller solutions, add one with last element

return smaller+new # combine those with last element and those without

Assumptions:

append() is constant timesmall+extra can be O(n) time.k, the solution is of size 2k.tn denote time to solve problem of size n.sn denote size of solution to a problem of size n.tn=tn-1+c3*n*sn-1+c1*sn-1+c2 (where c1,c2,c3 are some constants)tn>=tn-1+c1*2n-1+c2>=tn-2+c1*2n-2+c1*2n-1+2*c2>=tn-k+c1*2n-k+c1*2n-k-1+...+2n-1+k*c2>=t0+c1*20+c1*21+...+c1*2n-1+n*c2>=1+c1*2n+n*c2>O(2n)Summary of examples of complexity classes

O(1): code does not depend on size of the inputO(log n): reduce problem in half with constant effort at each stepO(n): simple iterative or recursive programs with a single loop or linear recursionO(nlog n): will see next timeO(nc): nested loops or recursive callsO(cn): multiple recursive calls at each level, where the number of levels is linear in nExample: Iterative Fibonacci

def fib_iter(n):

if n == 0: # O(1)

return 0 # O(1)

elif n == 1: # O(1)

return 1 # O(1)

else: # O(1)

fib_i = 0 # O(1)

fib_ii = 1 # O(1)

for i in range(n-1): # the loop executes O(n) iterations

tmp = fib_i # O(1)

fib_i = fib_ii # O(1)

fib_ii = tmp + fib_ii # O(1)

return fib_ii # O(1)

Analysis:

O(1)c1*O(1) + O(n)*c2*O(1) = O(n)

Example: Recursive Fibonacci

def fib_rec(n):

""" assumes n an int >= 0 """

if n == 0: # O(1)

return 0 # O(1)

elif n == 1: # O(1)

return 1 # O(1)

else: # O(1)

return fib_rec(n-1) + fib_rec(n-2)

Draw tree. Show the growth for small values of n. Claim (without proof) that the complexity is exponential, slightly lower than 2n.

Big-O summary

Lists: n is len(L)

Dictionaries: n is len(d)